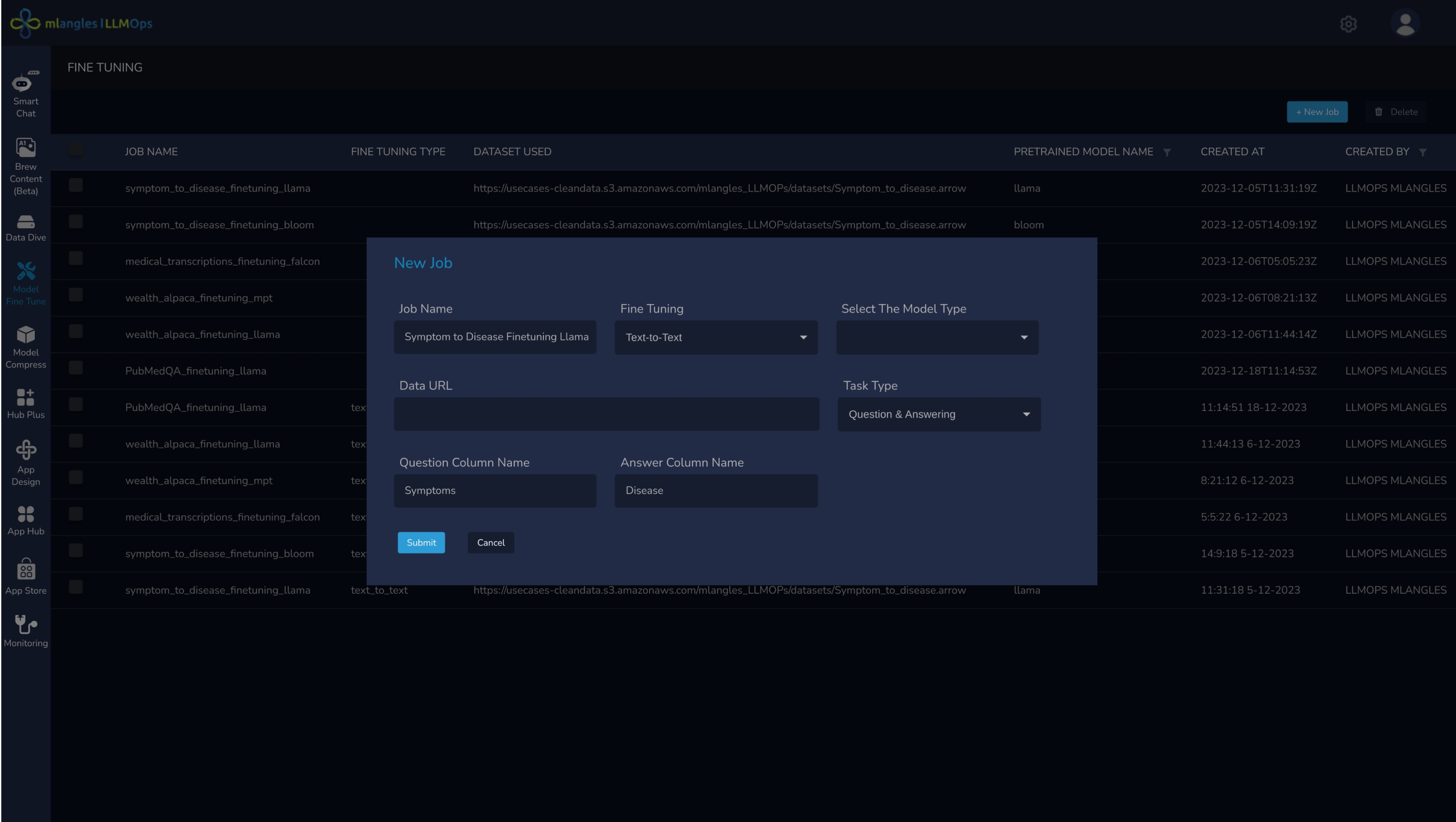

Finetuning LLMs

Automate Data Cleaning and Preparation

mlangles lets you fine-tune LLMs to fit your AI use case, using LoRA and other efficient fine-tuning methods. The platform accommodates a range of sample sizes and feature sets and automates preprocessing steps, giving you the flexibility to explore a wide range of model tuning methods.
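For readers new to LoRA, the idea can be sketched in a few lines of plain Python. This is an illustration of the general technique, not mlangles' implementation: the function names, matrix sizes, rank `r`, and scaling factor `alpha` are all assumptions chosen for the example. The base weight matrix stays frozen, and only two small matrices, `a` and `b`, would be trained.

```python
import random


def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def lora_merge(w, a, b, alpha, r):
    """Return W + (alpha / r) * B @ A, the LoRA-adapted weight matrix."""
    scale = alpha / r
    delta = matmul(b, a)  # (d_out x r) @ (r x d_in) -> d_out x d_in
    return [
        [w_ij + scale * d_ij for w_ij, d_ij in zip(w_row, d_row)]
        for w_row, d_row in zip(w, delta)
    ]


# Hypothetical sizes, chosen only to keep the example small.
d_out, d_in, r, alpha = 8, 8, 2, 4
rng = random.Random(0)

w = [[rng.gauss(0, 1) for _ in range(d_in)] for _ in range(d_out)]  # frozen base weights
a = [[rng.gauss(0, 0.01) for _ in range(d_in)] for _ in range(r)]   # trainable, r x d_in
b = [[0.0] * r for _ in range(d_out)]                               # trainable, d_out x r, zero-initialized

merged = lora_merge(w, a, b, alpha, r)

full_params = d_out * d_in          # parameters updated by full fine-tuning
lora_params = r * (d_in + d_out)    # parameters updated by LoRA
print(f"full fine-tuning: {full_params} params, LoRA: {lora_params} params")
```

Because `b` starts at zero, the adapted weights equal the base weights before any training, so fine-tuning begins from the original model's behavior. The parameter saving grows with layer size: the trainable count scales with `r * (d_in + d_out)` rather than `d_in * d_out`.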

Model Compression

Quantize and compress your fine-tuned LLMs

Optimize your AI model for faster performance and lower resource consumption with advanced compression techniques. mlangles supports model compression methods such as pruning and quantization, allowing you to reduce model size with minimal loss of accuracy. Whether you're deploying models on edge devices or cloud infrastructure, our platform ensures efficient use of computational resources while maintaining high performance.
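To make the quantization idea concrete, here is a minimal sketch of symmetric 8-bit quantization in plain Python. It illustrates the general technique only and does not describe how mlangles performs compression; the function names and sample weights are hypothetical.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Recover approximate float weights from the quantized integers."""
    return [v * scale for v in q]


# Hypothetical weights, standing in for one tensor of a fine-tuned model.
weights = [0.52, -1.27, 0.003, 0.9, -0.41]

q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

# The largest rounding error introduced by storing 8-bit integers instead of floats.
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print(q, scale, max_error)
```

Each weight is stored as one small integer plus a shared scale, which is where the size reduction comes from. The rounding error per weight is bounded by half a quantization step, which is why accuracy is typically re-checked on a validation set after compression.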

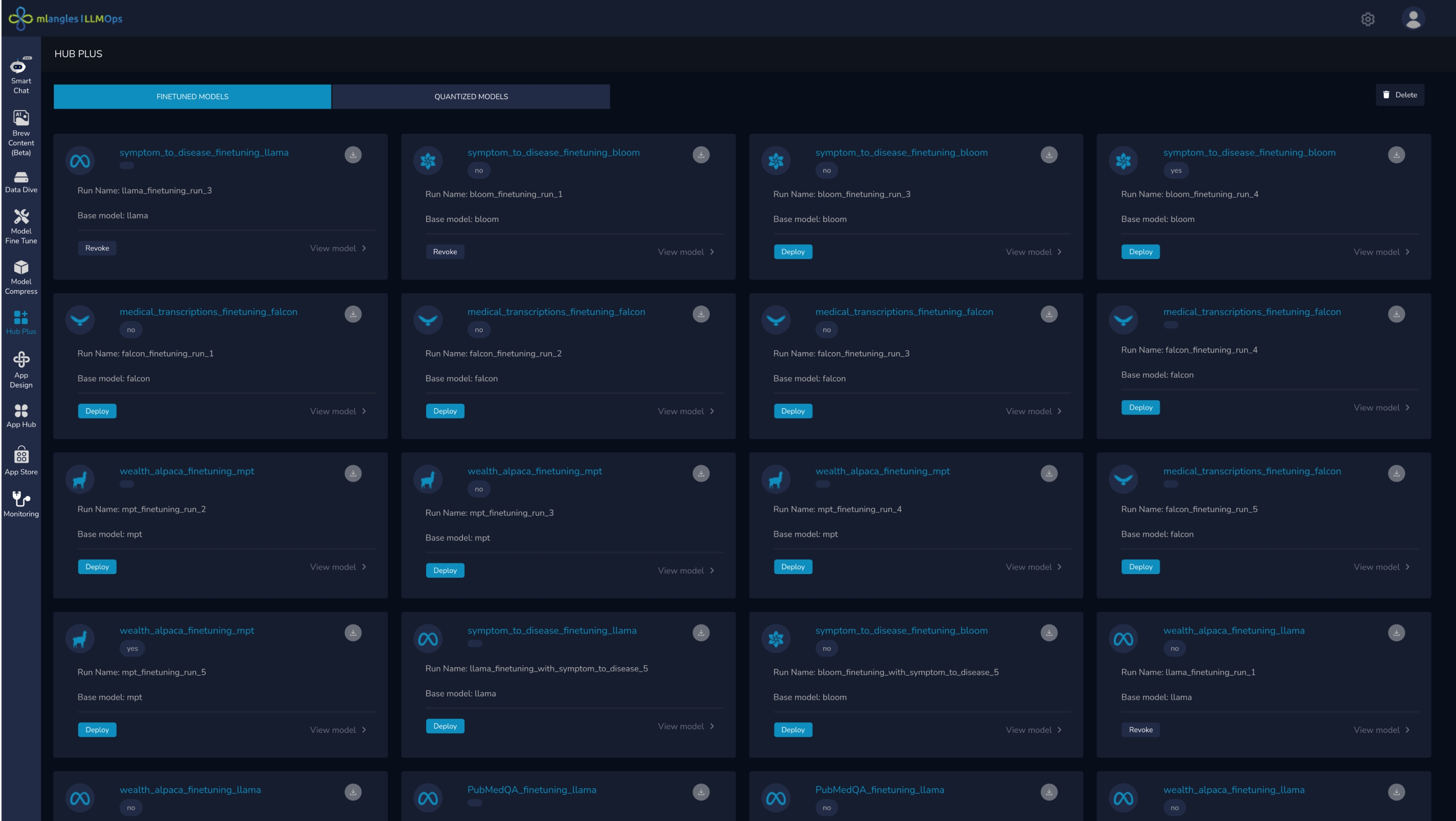

Hub Plus

Package and deploy your models directly from Hub Plus

mlangles Hub Plus lets you package and deploy your AI models in just a few clicks. Hub Plus streamlines the deployment process, allowing you to containerize your models and deploy them across various environments, from on-premises setups to cloud platforms. With robust version control, automated scaling, and integration capabilities, Hub Plus ensures that your models are production-ready and optimized for real-time performance. Stay agile as you manage, monitor, and update your AI models seamlessly, empowering your teams to focus on innovation rather than infrastructure.
